I was going through my old diaries with the view to putting the pages into the recycle bin when I came across an entry in 2006 that brought back painful memories (and perhaps tears to my eyes). This was an example when Murphy raised his head and continued doing so with near disastrous consequences – ok, my exaggeration – you can be the judge. The names have been changed to protect the innocent. A warning – this post is a long one, feel free to zone out and zone back in again further down. I have put everything into a timeline since that is what I get from my diary – also racking my memory to fill in gaps in my notes. I don’t have my original notes and detailed documents because all files had to be returned to the company when I got WFR’ed in 2010.

February 2006 – I got outsourced to a well known IT company. Basically we were given a choice, we move over or we leave. Leaving was not really an option for me, so I was the only one in Australia that got outsourced. 1 out of 3 – not bad!

April 2006 – Customer office in Malaysia was moved to another location. This included servers, networks etc, the whole kit and caboodle.

Fast forward to October 2006…

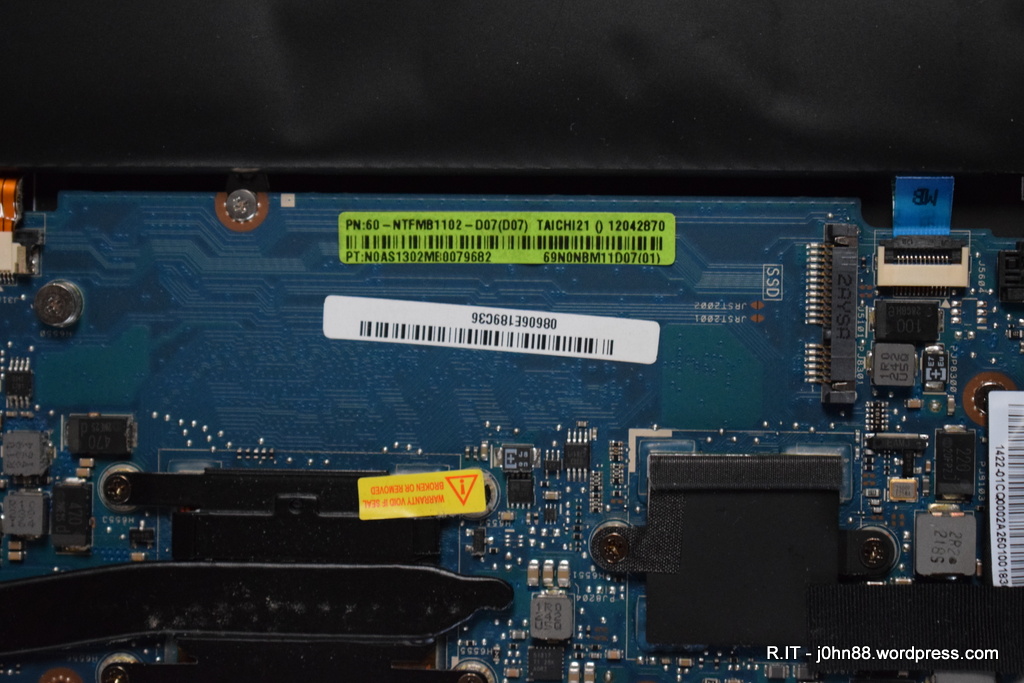

25th October 2006 – Malaysia server NTS4 (not its real name but similar) had shut down during the afternoon. Yes, NT does stand for Windows NT.

26th October 2006 – During the late afternoon, I hear about the outage – NTS4 was down at 13:09 Malaysia time yesterday. We arrange to get it restarted.

27th October 2006 – We find that the tape backup drive is not connected. Also we find that the disk drive in slot 4 has failed. After some conference calls, we determine that the disk had failed before the site move but apparently nothing had been done about it – [Murphy 1: Customer 0]. This was escalated to get the drive replaced. This drive was part of a 5-disk RAID-5 array. RAID-5 can handle a disk outage but requires replacing the failed drive as soon as possible, otherwise it operates in a degraded state with no fault-tolerance.
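For anyone wondering why one dead drive is survivable but two are not, here is a minimal sketch in Python (not the tooling we used, and the block contents are made up) of how RAID-5 parity behaves on a single stripe of a 5-disk array. The helper name `xor_bytes` is my own for illustration.

```python
def xor_bytes(*blocks):
    """XOR any number of equal-length byte strings together."""
    out = bytearray(len(blocks[0]))
    for block in blocks:
        for i, byte in enumerate(block):
            out[i] ^= byte
    return bytes(out)

# One stripe of a 5-disk RAID-5 array: four data blocks plus one parity block.
# The contents are arbitrary sample bytes.
d1, d2, d3, d4 = (bytes([v]) * 8 for v in (0x11, 0x22, 0x44, 0x88))
parity = xor_bytes(d1, d2, d3, d4)

# Degraded state, one drive lost: the missing block is the XOR of the survivors.
recovered_d4 = xor_bytes(d1, d2, d3, parity)

# Two drives lost: the survivors only yield d3 XOR d4, not either block on its own.
leftover = xor_bytes(d1, d2, parity)
```

With one drive gone the array can still answer every read, but there is no redundancy left, which is why a failed drive that sits unreplaced for months is an accident waiting to happen.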

28th October 2006 – Engineer is scheduled onsite in Malaysia in the morning. A short time later, I get a call – the NTS4 server is down. Conference calls for the next three hours – it appears that the server is unable to boot as it has lost another drive, although this time the error report indicates that a drive was removed. We check with the engineer – he denies touching anything. All drives are still in the server – so, if the drive was removed, it was put back in – but that was already too late because the array controller now has two drives down, so what really happened? Anyway, I get on the phone to the regional service manager – I tell him that if the drive was removed, the data on the drive should still be intact and I would (with 99% certainty) be able to recreate the array using the data from each disk drive except the one that had failed a long time ago. I also tell him not to let any engineers do anything to the server before I get there.

A few hours later, after more conference calls – we decide that we need to bring services back online. NTS4 was both a SQL database server and a Microsoft Exchange server. I commence copying Exchange installation files from Singapore to another Malaysia server NTS8 which will become the replacement Exchange server.

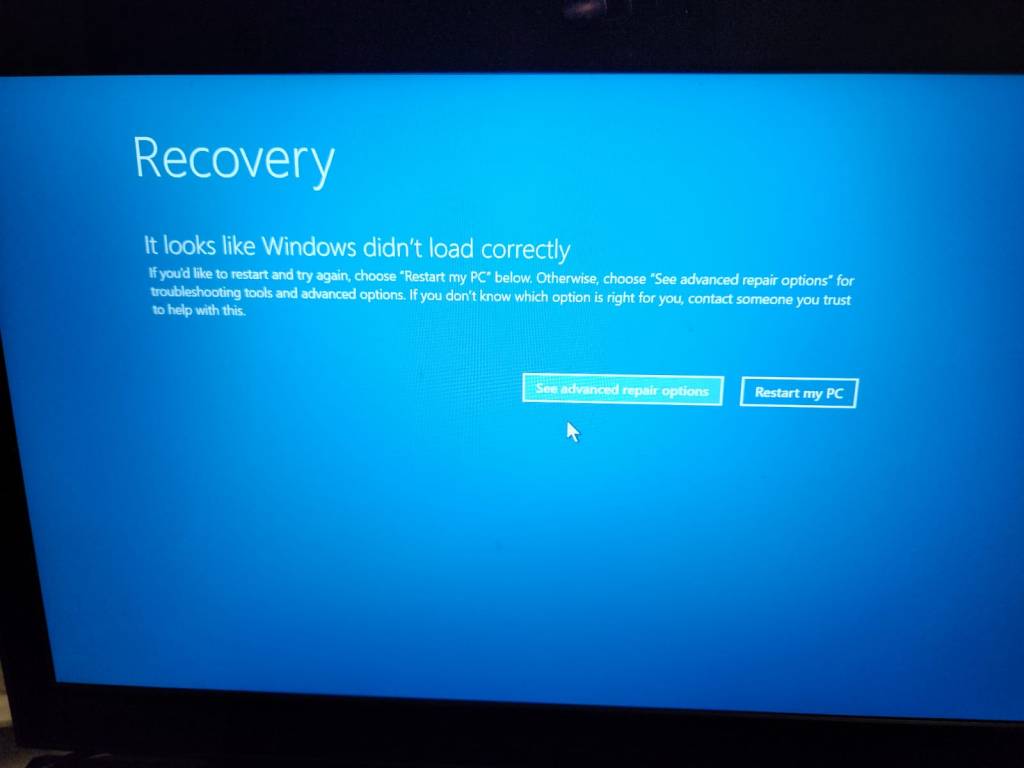

Fast forward a couple of hours – I get a call that the server NTS4 has been fixed. That was when I had the knot in my stomach and shivers down my spine, knowing what comes next – like when you are standing on the edge of an abyss with the wind behind you getting stronger and stronger and nowhere to go.

What happened is a case of pride before prudence (not prejudice – ok, pun). My company’s Wintel Level 3 is based in Malaysia – they are supposed to know everything there is to know about Windows and Intel servers – however, as I found out, they know little about data recovery. Pressure was put on them to resolve a problem – why should an outsider (yes, myself – a newcomer with only 8 months in the company) be the only one that could fix the problem. When this was put to you by a big boss, how can you say that you can’t fix it – of course you can fix it. So what they did was to replace the long time failed drive and the one that had been removed. The array begins rebuilding – smiles all around… Except that the server does not boot – of course it was obvious to me, but I knew then that the data was essentially lost. [Murphy 2: Customer 0]

Ok – no point in crying over spilt milk – the only other course of action (with little hope) I could suggest is to have the server looked at by a data recovery company. My company does not have a data recovery department (surprise) – something that I have suggested, so an external company was required. A suitable company was located in Malaysia – and the server is being packaged up to go to them – cost would be 8,000-20,000 Malaysian Ringgits (irrelevant) and about 1 week turnaround. I finally got to bed on that Saturday at about midnight to try to get some sleep before a scheduled conference call 4 hours later.

29th October 2006 – Looks like that particular Sunday would be full-on. I am right. 04:00 conference call, followed by more work and more calls. I forgot to mention that I am also responsible for Microsoft Exchange 5.5 Level 3 support especially for these emergencies like server restorations. For about 7 hours, I work on installing Exchange 5.5 on NTS8 and finally around 21:30 I get all the mailboxes created. Then spent the next 3 hours getting replication and the X.400 Connector working to the Singapore regional bridgehead. Got to bed at about 1AM.

30th October 2006 – Got up early on a fine Monday morning and started installing the Trend Micro ScanMail and End User Quarantine software for Exchange. Installed Backup and service monitoring agents – yes, I basically install software for the entire infrastructure. Then to prepare for Microsoft SQL – copying install files to Malaysia from Singapore. We would use NTS8 for SQL – the Malaysia customer office uses SQL as the database backend for AccPac accounting software.

31st October 2006 – More work getting SQL installed and finally ready to look at restoring databases. A problem arose trying to read from the NTS4 tapes – it looks like the tape drive wasn’t working for some reason. I would probably have to actually go there, since I am also the level 3 support for Arcserve backup software – my company didn’t really have people who know much about these old applications, and I had been supporting and installing those applications since 1996. Anyway, the Malaysia customer office had email working and the accounting database could wait until I get there to restore the NTS4 server from backup tapes.

Over the next 24 hours, I work on a site recovery and contingency plan. I knew that I would have to restore the NTS4 server from tape, so would need to export and import the mailboxes from the restored server to the new server. There were quite a few steps that would be needed in order to effect a good recovery and minimize any further downtime. Towards the end of the job, I expected that there would be a number of late nights involved.

2nd November 2006 – The report back from the data recovery company was not good. They cannot do anything because the array had been reinitialized. There were lots of files that could be recovered, but the main files we are wanting are the Exchange & SQL databases and associated log files – these are very large and much of the data had been lost due to the data striping of the array. That is, introducing two drives forced a rebuild, which is basically a reinitialize. I estimated that about a quarter of the actual data had effectively been replaced with zeroes. The server would be returned to the customer site.

6th November 2006 – The NTS8 server is down. Oh no! An IBM engineer is requested since this server is an IBM xSeries server. I thought at the time, that I should start arranging my travel and book flights. I get approval from my manager to fly to Malaysia from Sydney with the purpose of rebuilding and restoring NTS4 and to resolve NTS8. I get a call from the IBM engineer – the server is down due to a bad stripe. [Murphy 3: Customer 0] How can that happen? [It seems that if data within a stripe becomes inconsistent due to media errors, i.e. bad block (or part of) on the hard disk, then the stripe becomes bad. For instance, with three drives in RAID-5 and a block size of 16KB, this means that 32KB has become unavailable – and if this is part of an operating system file, then that could be preventing the server from booting.] Flights arranged, SYD-SIN, SIN-KUL for the next day.
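The stripe arithmetic in the bracketed note above is easy to check. This is a small Python sketch with a function name I made up for illustration; it only assumes that each RAID-5 stripe holds one parity block and that every other drive contributes one data block.

```python
def data_lost_per_bad_stripe_kb(drive_count, block_size_kb):
    """User data held by one RAID-5 stripe: every drive but one carries data."""
    if drive_count < 3:
        raise ValueError("RAID-5 needs at least three drives")
    return (drive_count - 1) * block_size_kb

# Three drives with 16KB blocks, as in the example above.
print(data_lost_per_bad_stripe_kb(3, 16))  # prints 32
```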

7th November 2006 – Left home at 05:30 heading to the airport for a 08:30 flight to Singapore. Arrived about 13:30 Singapore time and waiting for my 17:00 flight to KL. I get a call from the IBM engineer – he can fix the bad stripe. Really? Ok – how? Delete the array and recreate the array – yeah, right! What about the data? No problem – the data should be fine – no thanks! I forbid him to do this as I am on the way to Malaysia – don’t touch the server until I tell you to! I can be forceful when I need to be. Deleting and recreating the array will definitely lose the data – I was not going to lose two servers in a row, no way, if I could help it! I finally get my flight and arrive in KL and head to the hotel – arriving around 19:30 just in time for dinner – best to eat and get a good night’s sleep because tomorrow would be a long day.

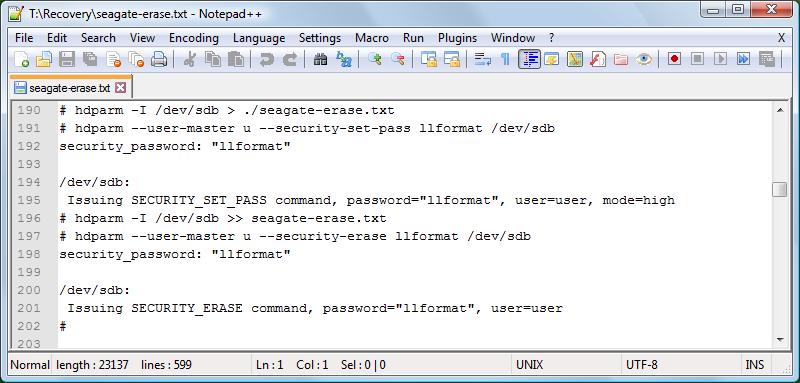

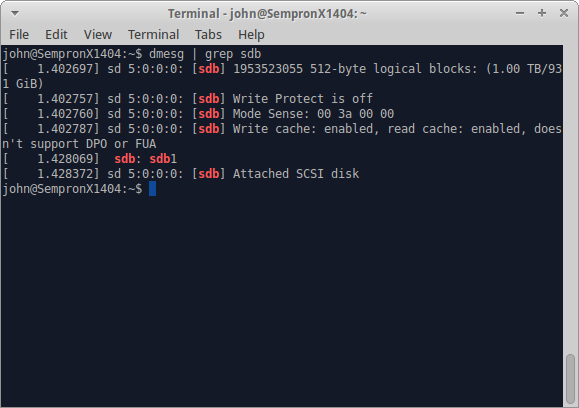

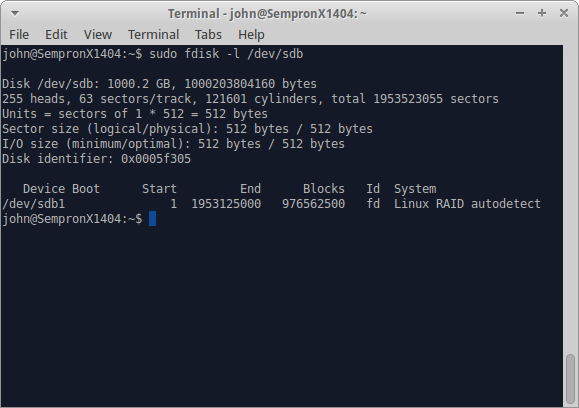

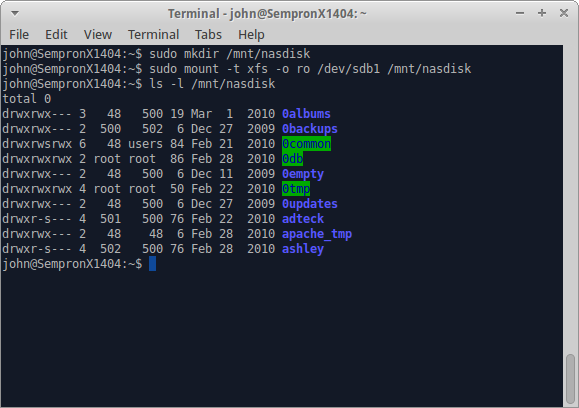

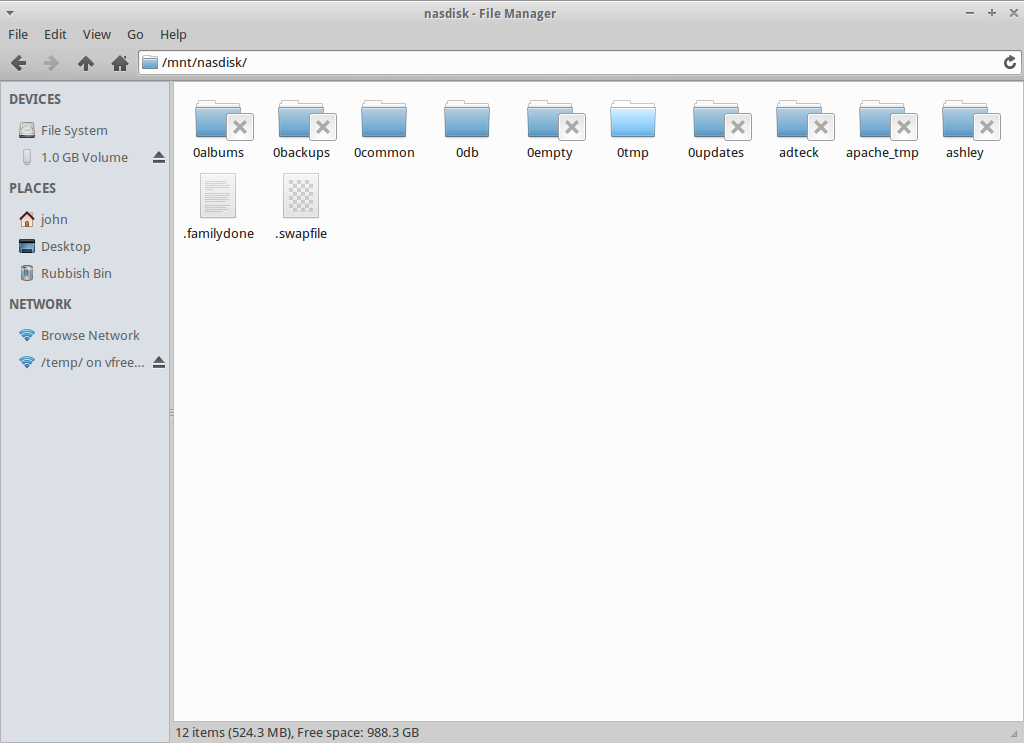

8th November 2006 – Arrived at Malaysia customer site at 08:25. I have a look at both NTS4 and NTS8 servers. I carry a couple of Linux CD’s with me all the time. I planned to boot each server with a Knoppix live CD and run a “cat /etc/fstab” command – this would list the drives and file systems that Knoppix (Linux) recognizes as being available.

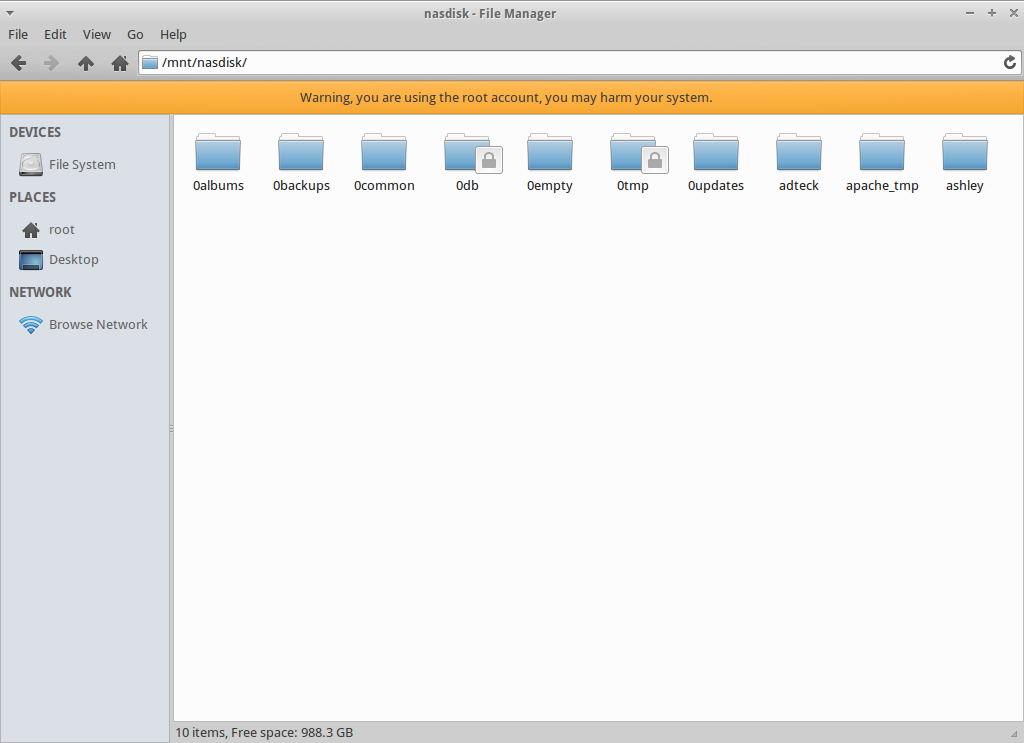

NTS8 – single drive, 2 partitions. /dev/sda1, i.e. C: drive on NTS8 is corrupted at about the 7.5GB point. /dev/sda2, D: drive appears intact – fantastic, because this is where the Exchange server databases and logfiles are stored. This is great news because it means that I can “recover.it“. If I could get those Exchange databases and logfiles copied and restored successfully in the correct manner, the users will have all their email up to the point of failure – that was the best that anyone could hope to achieve. I scrounged around looking for a machine with sufficient storage capacity – I finally found a relatively new desktop with enough space. I enabled Samba and then shared /dev/sda2 and started the copy of the Exchange databases and logfiles, etc to the desktop machine. I also wanted the Arcserve databases and logs. It took a while because the files are quite large – especially Arcserve, even though Exchange had only been running for approximately a week until it went down.

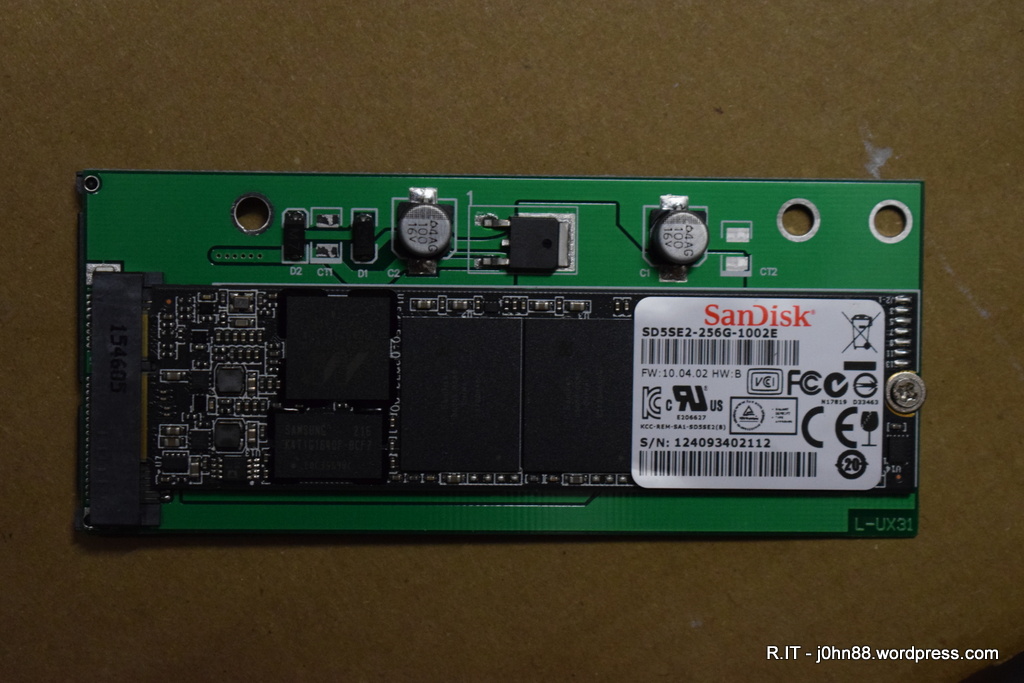

I reconfigured NTS4 to connect all of the disks to the inbuilt SCSI controller instead of the Smart Array controller. Knoppix recognized 4 drives, 18GB, 18GB, 18GB, 36GB – I set up to copy the contents of each disk across the network to my laptop. I would use this data to test my perl script – the one that I would have used to rebuild the data if the disk array had been left as I had requested instead of being interfered with and effectively destroyed by the reinitialization process.

When the copying from NTS8 had completed, I started the copying of the files from the desktop to my usb disk – careful is my middle name, especially when it comes to critical customer data.

I rebooted NTS8 as it was time to “restore.it” and booted from the IBM ServerGuide cd. I erased the disk array and then started the install which would create a new array and then install Windows 2000 – since this is what had been running on NTS8, however it hung up when Setup is starting Windows 2000. Bummer! Anyway, it was late – 20:45, better to get some rest and start afresh in the morning. I called the IBM engineer, explained what I had done, and told him that his services were no longer required. He could go ahead and close the call-out ticket.

9th November 2006 – In the office early again. I worked on NTS8 again, trying to install a couple of times until finally the penny dropped, disconnected the tape drive and tried again. Success – it seemed that during the ServerGuide installation, it would hang trying to detect additional hardware, so best not to give it hardware to find and not know what to do with. Windows 2000 Server installed – great. I then quickly installed the Arcserve backup application and restored the D: drive then restored the C: drive including the system state. This overwrote the fresh installation with what had been backed up during the last full backup which fortunately was the night before the crash. I rebooted when the server was ready, and then stopped all of the Exchange related services. I started the copying of the databases and logfiles from the desktop machine – this should put back into place the files up to the point of failure – at least for the email system.

Done – files are back in place – quick check of the files – they looked ok, file sizes the same as on the desktop. It was necessary to run a recovery process so that the files could be fully integrated into Exchange and the system registry. I ran the following commands – unfortunately I cannot give you a lot of detail on them as it isn’t relevant to this post, but suffice to say that the commands and specific order are necessary – as any Exchange 5.5 level 3 engineer will tell you.

“eseutil /g” – a few errors seen, not a problem as they were expected. “eseutil /r” – soft recovery completed successfully. Started the System Attendant and Directory Service services for Exchange, then logged off and logged on with the Exchange service account. “isinteg -patch” – completed, no errors. Started all remaining Exchange services – voila! Exchange is running. Fixed Trend Micro ScanMail due to the antivirus patches not updating.

All users are informed that email is now accessible and that mail should be at the point of failure – hooray! [Murphy 3: Customer 1]

I then copied the databases for Arcserve so that Arcserve was back to the state at the time of the server crash. All done. It was time to look further at NTS4. I reconfigure the disk drives back to the array controller as by then I had all of the disk contents and can work on rebuilding the server. I installed Windows NT 4.0. While that was happening, I had a look at the tape drive to find out why it was not being recognized. I saw some bent pins in the scsi connector – how did that happen?

The penny dropped – it happens a lot! During the site move in April 2006, they would have disconnected the cables to move the equipment and reconnect. Whoever reconnected the cable to the tape backup unit obviously did so very clumsily and the backup unit was not tested afterwards. [Murphy 4: Customer 1]

10th November 2006 – I had to check out of the Crystal Crown Hotel – and would move to another hotel – Hilton PJ, later in the day. When booking flights and accommodation on short notice, we could not always get the one hotel for the entire stay. Flights to Singapore and then back to Sydney were reserved. Installed SQL Server 2000 onto NTS8 in preparation for restoration of AccPac databases. A slight (conservatively) hitch had to be resolved: the last backup of NTS4 was probably the one before the office move in April – what to do? Ok – not my problem, someone else could worry about that. I continued with my recovery plan to finish the NTS4 reinstallation in preparation for data restoration from tape.

11th November 2006 – The last backup tape of NTS4 (17/04/2006) was merged into the Arcserve database on NTS8 – this was needed before restoration from the tape was possible. Restored two backup sessions to a temporary folder on NTS8. Attempts to restore session 3 resulted in session 2 being found instead – what gives? [Murphy 5: Customer 1]

12th November 2006 – It appears that Arcserve 6.61 when doing a full drive backup would allocate space on the tape based on the expected backup size requirement, however during the backup – some files may be unavailable, hence the actual backup is smaller resulting in slack space on the tape. This was causing a problem with the restore because the tape could not be positioned to session 3 properly. Actually on further analysis, there appeared to be an extra session in between 2 and 3, so that 3 was not real, but trying to restore 3 ended up with 2. Restoring session 4 just failed because if it got to 4, it would see 3 and fail – pulling my hair out just didn’t help. To rule out a tape drive problem, I decided to copy the tape to another different tape media. I used the tapecopy command to copy all sessions from the DLT4 tape to the SDLT1 tape. As it was going to take some time, I began analyzing the data I collected from NTS4 disks before the reinstallation. I updated my perl script so that I could recreate the logical drive – as an academic exercise.

13th November 2006 – The tapecopy had completed. After deleting the tape from the database, I re-merged the tape in Arcserve – to my immediate relief, all backup sessions were visible and in the correct order. [Murphy 5: Customer 2]

I was able to restore the first three sessions comprising C:, D: and F: then the fourth session being the System Registry was also successful. Next on the list was to restore the SQL databases – another hitch – the restore fails with “no valid destination” – I cannot restore the databases to NTS8 when they were backed up on NTS4. This was apparently a limitation of the backup agent. NTS4 and NTS8 were on different Windows domains – I had to establish a trust between the two domains, then was able to restore from NTS8 directly to NTS4 when restoring to the original location. It wasn’t quite that straightforward as a reboot was involved and the Master database had to be restored first before restores of other SQL databases could work – but it was done. [Murphy 5: Customer 3].

Unfortunately we didn’t really want the SQL databases back on NTS4 because that server was already obsolete, so we decided at the time, that another server NTS5 would become the SQL database server. Since SQL Server was no longer needed on NTS8, it was uninstalled as it was intended to be temporary anyway for the purpose of restoring the databases.

14th November 2006 – It was time for the Exchange database restoration on NTS4. The Exchange site was isolated, to avoid replication – essential when doing an online restoration of old databases. The Exchange database restore was commenced. In the meantime NTS5 was worked on to install SQL Server and Arcserve backup agents. I also did some further work on my perl script for the raid recovery test.

Whew! Still reading this? I did say that this was a long post. Anyway to cut a long story short – in the remaining days of that week, the Exchange databases were restored to NTS4. Exchange was brought up and verified that the mailboxes were intact – which was fantastic. All mailboxes were then exported to pst files using the Exmerge program. These pst files were uploaded to the NTS8 Exchange server. All of the users were happy to get more emails back, but not so happy that the emails between 18/04/2006 and 28/10/2006 were irretrievably lost. SQL databases were also moved to NTS5 and my job in Malaysia was done except for some cleanup actions that could be done remotely. [Murphy 5: Customer 4]

This was an example of some of the things that I encountered during my roving life as an IT consultant and troubleshooter. In those couple of weeks I had to contend with multiple failures involving disk arrays and had to perform server recoveries and restorations under difficult circumstances.

Did we break even at Murphy 5: Customer 4 – doesn’t look like it? Oh yes, the backup tape was six months old – what about the AccPac accounting databases, I can hear you asking? My company had to hire a number of data entry people to input all the accounts for the six months or more based on the accounting printouts that they had – lucky they had hard-copies, right? And yes, the whole accounting process had to be followed, April data entry, then April end of month closure, printout, May data entry… A month or so later, the data entry was completed, and AccPac was rolling ahead! [Murphy 5: Customer 5]

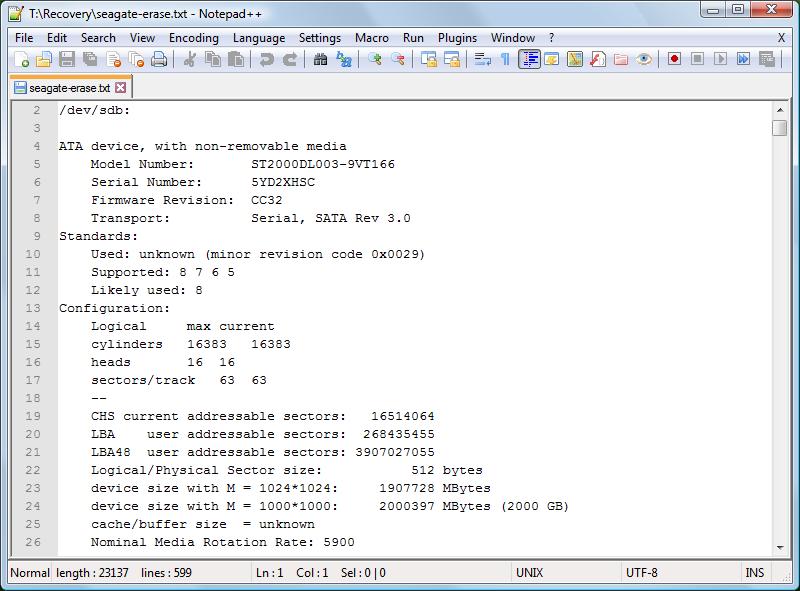

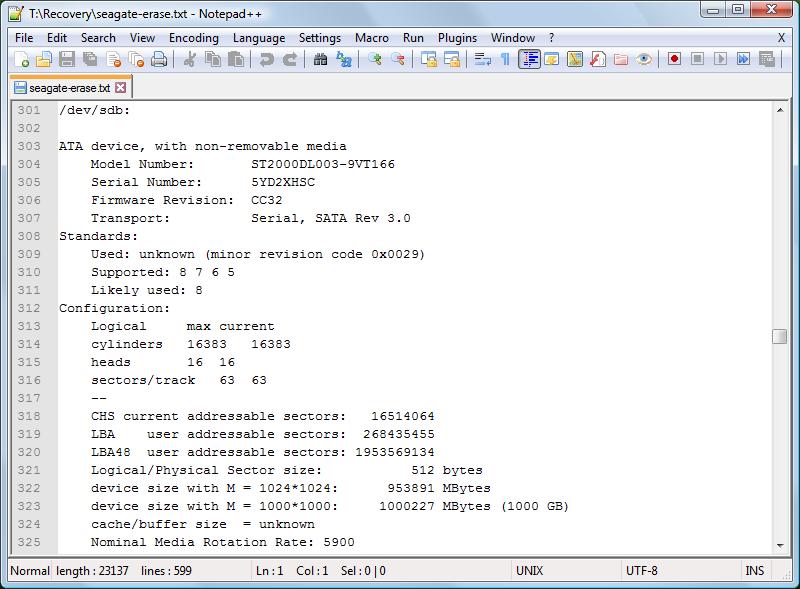

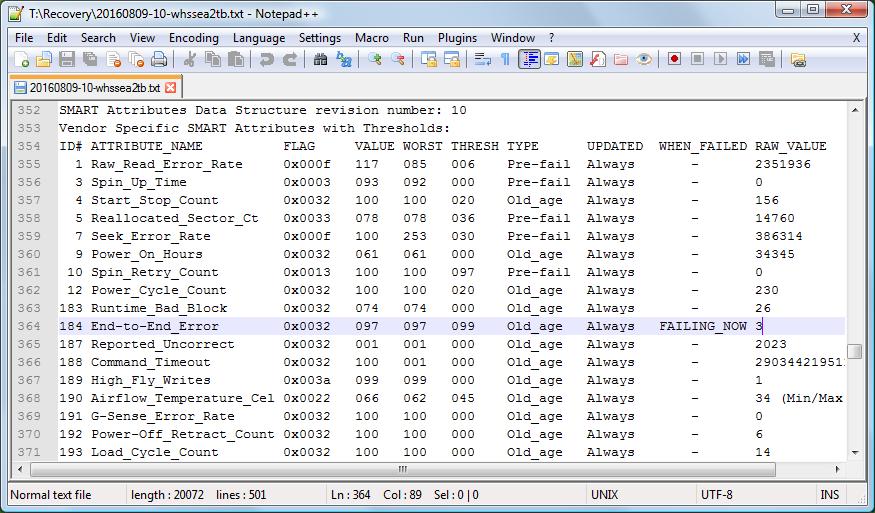

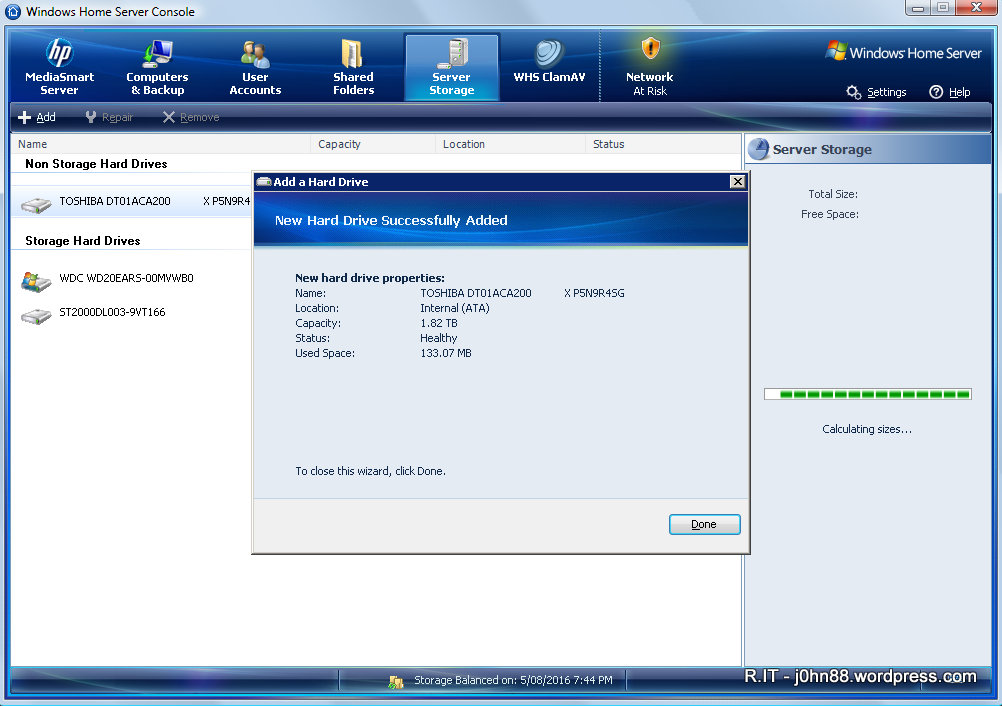

[PS] I feel a bit sorry to put you through all of this, but I hope you understand that an IT problem is not always straightforward. I also tried to keep the relevant parts as it is possible that others may encounter this situation in the future and may find some help in this post. I forgot to mention that I did finish my perl script to recreate the logical drive of the failed array, then during analysis, was able to show conclusively that the array had been reinitialized which was why the data was lost. Further to this, I was able to confirm during testing on equivalent hardware that taking a 5 drive RAID-5 array, I could pull out one drive and lock it away to simulate an old failed drive, then pull out a second drive to crash the array – read the contents of the four available disks, then I could use my perl script to recreate the data on the locked away drive, and also to recreate a logical drive that is the same as converting the array into a single larger drive. All this using the perl scripting language, which is over 20 years old – and the script consisting of only a small number of actual commands. For those of you who know perl, you will understand “$b5 = $b1 ^ $b2 ^ $b3 ^ $b4;” – that is the magic line. Everything else was just definitions, reading, writing and looping.
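For the curious, the idea behind that magic line can be sketched in a few lines of Python. To be clear, this is not my original perl script, which went back to the company with everything else. The function names are invented for illustration, the disk images are tiny in-memory byte strings rather than real drives, and the parity rotation (starting on the last disk and moving one disk to the left per stripe) is an assumption. A real controller may lay out parity differently, and working out the real layout is part of the job.

```python
from functools import reduce

def xor_blocks(blocks):
    """XOR equal-length byte strings together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

def make_array(data, n, block_size):
    """Build n disk images from data (test helper, assumed parity rotation)."""
    images = [bytearray() for _ in range(n)]
    stripe_bytes = (n - 1) * block_size
    for stripe, off in enumerate(range(0, len(data), stripe_bytes)):
        chunk = data[off:off + stripe_bytes]
        blocks = [chunk[i:i + block_size] for i in range(0, stripe_bytes, block_size)]
        parity_disk = (n - 1 - stripe) % n
        parity = xor_blocks(blocks)
        data_blocks = iter(blocks)
        for disk in range(n):
            images[disk] += parity if disk == parity_disk else next(data_blocks)
    return [bytes(img) for img in images]

def rebuild_missing(images):
    """Recreate the one missing disk image (marked None) from the others."""
    return xor_blocks([img for img in images if img is not None])

def destripe(images, block_size):
    """Join the data blocks in logical order, skipping each stripe's parity."""
    n = len(images)
    out = bytearray()
    for stripe in range(len(images[0]) // block_size):
        parity_disk = (n - 1 - stripe) % n
        start = stripe * block_size
        for disk in range(n):
            if disk != parity_disk:
                out += images[disk][start:start + block_size]
    return bytes(out)

# Simulate the test: a 5-disk array, one drive locked away, then rebuilt.
data = bytes(range(256)) * 2
disks = make_array(data, n=5, block_size=16)
survivors = list(disks)
survivors[3] = None                      # the long-failed drive
survivors[3] = rebuild_missing(survivors)
logical_drive = destripe(survivors, 16)
```

Because the XOR of all five blocks in a stripe is always zero, `rebuild_missing` does not need to know which disk holds the parity for a given stripe. Only `destripe` depends on the layout.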

Maybe we could make a movie out of this – but of course, no car chases, no martial arts, no gunplay, no scantily clad women – no fun, right?